Key Takeaways

- A reinforcement schedule is a rule stating which instances of behavior, if any, will be reinforced.

- Reinforcement schedules can be divided into two broad categories: continuous schedules and partial schedules (also called intermittent schedules).

- In a continuous schedule, every instance of a desired behavior is reinforced, whereas partial schedules only reinforce the desired behavior occasionally.

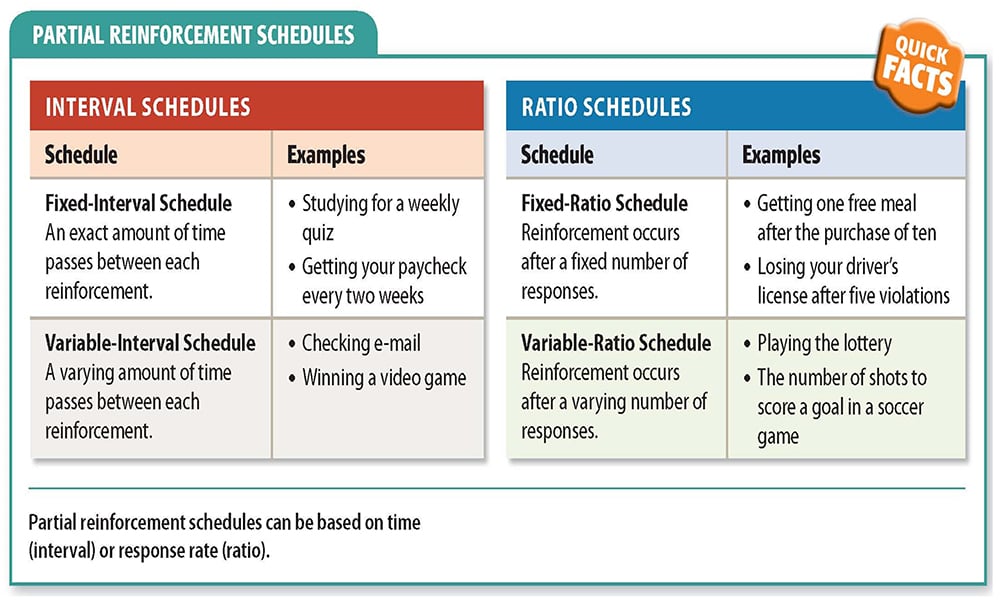

- Partial reinforcement schedules are described as either fixed or variable, and as either interval or ratio.

- Combinations of these four descriptors yield four kinds of partial reinforcement schedules: fixed-ratio, fixed-interval, variable-ratio, and variable-interval.

In 1957, a revolutionary book for the field of behavioral science was published: Schedules of Reinforcement by C.B. Ferster and B.F. Skinner.

The book showed that organisms could be reinforced on different schedules and that different schedules produced distinct behavioral outcomes.

Ferster and Skinner’s work established that how and when behaviors were reinforced had significant effects on the strength and consistency of those behaviors.

Introduction

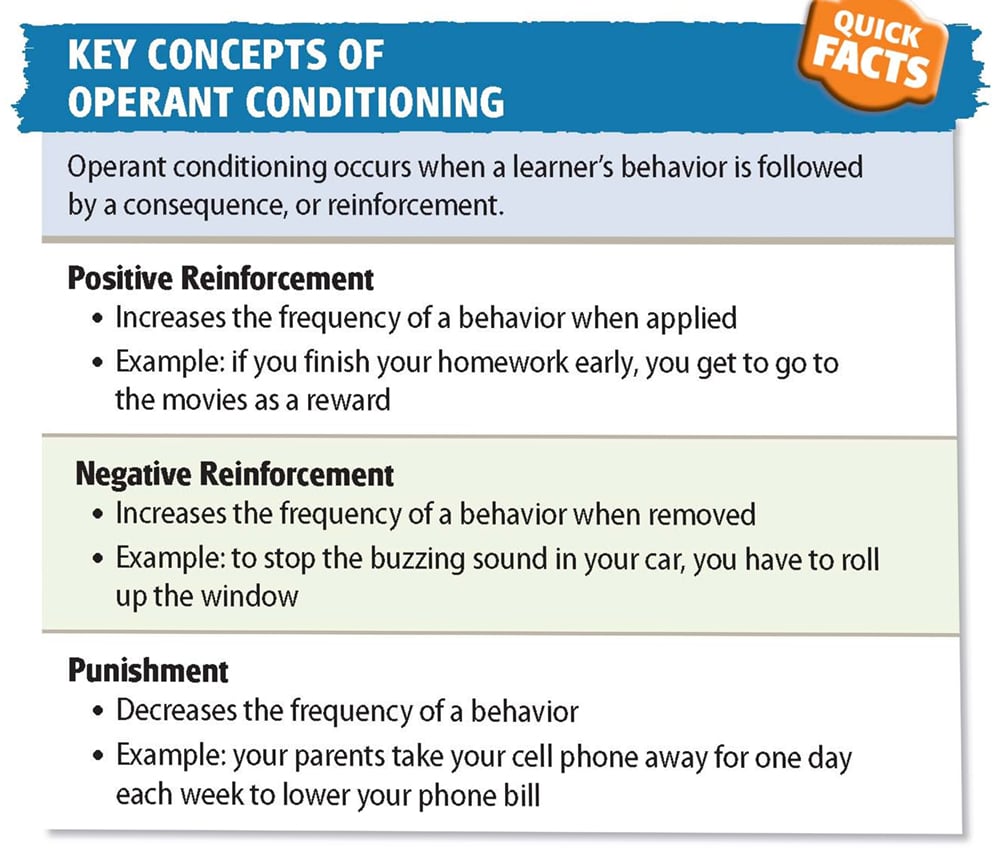

A schedule of reinforcement is a component of operant conditioning (also known as instrumental conditioning). It is a rule that determines when a behavior will be reinforced, for example, whether reinforcement depends on the passage of time or on the number of responses made.

Schedules of reinforcement can be divided into two broad categories: continuous reinforcement, which reinforces a response every time, and partial reinforcement, which reinforces a response occasionally.

The type of reinforcement schedule used significantly affects both the response rate and the behavior's resistance to extinction.

Research into schedules of reinforcement has had important implications for several areas of behavioral science, including choice behavior, behavioral pharmacology, and behavioral economics.

Continuous Reinforcement

In a continuous schedule, reinforcement is provided every time the desired behavior occurs.

Because the behavior is reinforced every time, the association is easy to make and learning occurs quickly. However, this also means that extinction occurs quickly once reinforcement stops.

For Example

We can better understand the concept of continuous reinforcement by using candy machines as an example.

Candy machines are examples of continuous reinforcement because every time we put money in (behavior), we receive candy in return (positive reinforcement).

However, if a candy machine were to fail to provide candy twice in a row, we would likely stop trying to put money in (Myers, 2011).

We have come to expect our behavior to be reinforced every time it is performed and quickly grow discouraged if it is not.

Partial (Intermittent) Reinforcement Schedules

Unlike continuous schedules, partial schedules only reinforce the desired behavior occasionally rather than all the time. This leads to slower learning since it is initially more difficult to make the association between behavior and reinforcement.

However, partial schedules also produce behavior that is more resistant to extinction. Organisms are tempted to persist in their behavior in hopes that they will eventually be rewarded.

For instance, slot machines at casinos operate on partial schedules. They provide money (positive reinforcement) after an unpredictable number of plays (behavior). Hence, slot players are likely to keep playing in the hope that they will win money in the next round (Myers, 2011).

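Partial reinforcement schedules are the most common in everyday life. They vary according to whether reinforcement depends on the number of responses made (ratio) or on the time that has passed (interval), and according to whether that requirement stays the same (fixed) or changes unpredictably (variable).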

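- Fixed schedule: the requirement for reinforcement (a number of responses or an amount of time) stays the same from one reinforcer to the next.

- Variable schedule: the requirement changes unpredictably from one reinforcer to the next, varying around an average.

- Ratio schedule: reinforcement depends on the number of responses made.

- Interval schedule: reinforcement depends on the amount of time that has passed since the last reinforcer.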

Combinations of these four descriptors yield four kinds of partial reinforcement schedules: fixed-ratio, fixed-interval, variable-ratio and variable-interval.

Fixed Interval Schedule

In operant conditioning, a fixed-interval schedule is one in which reinforcement is given for the first desired response made after a specific (predictable) amount of time has passed.

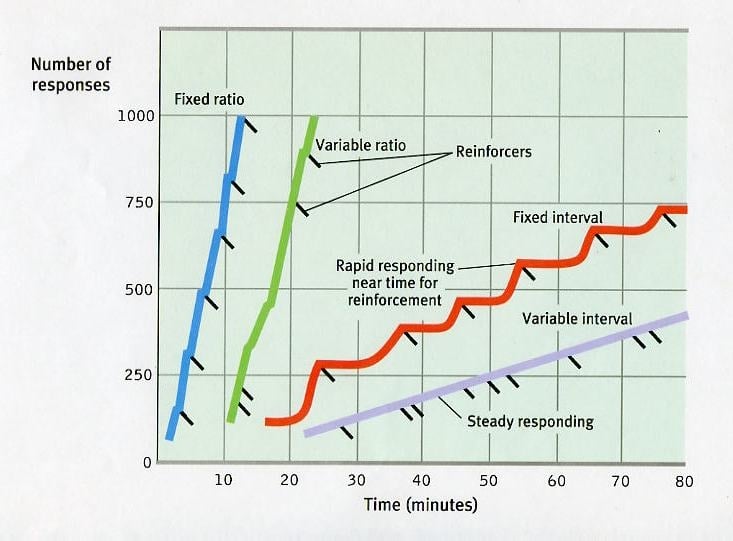

Such a schedule results in a tendency for organisms to increase the frequency of responses closer to the anticipated time of reinforcement. However, immediately after being reinforced, the frequency of responses decreases.

The fluctuation in response rates means that a fixed-interval schedule will produce a scalloped pattern rather than steady rates of responding.
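The rule behind a fixed-interval schedule can be sketched as a small Python class. This is an illustrative sketch only: the class and method names are hypothetical, time is measured in arbitrary units, and the clock is assumed to start at zero and restart each time a reinforcer is delivered. Responses made before the interval ends earn nothing, which is why responding tends to pick up only as the interval nears its end.

```python
class FixedIntervalSchedule:
    """Hypothetical sketch: reinforce the first response after a fixed interval.

    Time units are arbitrary (seconds, days, ...). The clock is assumed to
    start at `start_time` and restart whenever a reinforcer is delivered.
    """

    def __init__(self, interval: float, start_time: float = 0.0) -> None:
        if interval <= 0:
            raise ValueError("interval must be positive")
        self.interval = interval
        self.last_reinforcement = start_time

    def respond(self, t: float) -> bool:
        """Record a response at time t; return True if it is reinforced."""
        if t - self.last_reinforcement >= self.interval:
            # The interval has elapsed: reinforce and restart the clock.
            self.last_reinforcement = t
            return True
        # Responses made before the interval ends are not reinforced.
        return False


schedule = FixedIntervalSchedule(interval=60)
results = [(t, schedule.respond(t)) for t in (30, 59, 61, 90, 121)]
print(results)
# [(30, False), (59, False), (61, True), (90, False), (121, True)]
```

The response at 121 is reinforced because 60 units have passed since the reinforcer at 61, not since time 0.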

For Example

An example of a fixed-interval schedule would be a teacher giving students a weekly quiz every Monday.

Over the weekend, there is suddenly a flurry of studying for the quiz. On Monday, the students take the quiz and are reinforced for studying (positive reinforcement: receive a good grade; negative reinforcement: do not fail the quiz).

For the next few days, they are likely to relax after finishing the stressful experience until the next quiz date draws too near for them to ignore.

Variable Interval Schedule

In operant conditioning, a variable-interval schedule is one in which reinforcement is given for the first desired response made after a random (unpredictable) amount of time has passed.

This schedule produces a low, steady response rate because organisms cannot predict when the next reinforcer will become available.

For Example

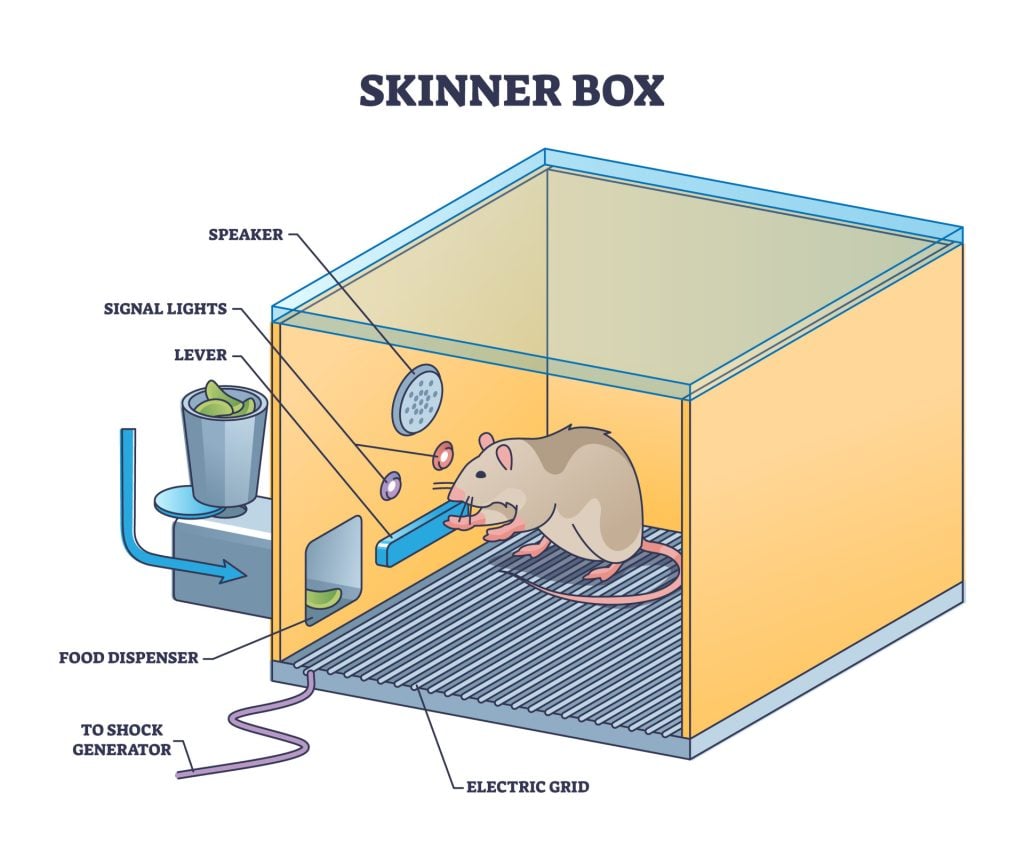

A pigeon in a Skinner box has to peck a key to receive a food pellet. Pellets become available after time intervals that vary from 2 to 5 minutes.

It is given a pellet after 3 minutes, then 5 minutes, then 2 minutes, etc. It will respond steadily since it does not know when its behavior will be reinforced.
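The pigeon example can be simulated with a short Python sketch. The class name is hypothetical, and drawing each interval uniformly between 2 and 5 minutes (120 to 300 seconds) is an assumption made for illustration; laboratory variable-interval schedules often use other interval lists. The simulated pigeon pecks once per second, and a new interval is drawn after every pellet.

```python
import random


class VariableIntervalSchedule:
    """Hypothetical sketch: reinforce the first response after an unpredictable interval.

    Each interval is drawn uniformly between min_interval and max_interval
    (an assumption of this sketch); a new one is drawn after every reinforcer.
    """

    def __init__(self, min_interval: float, max_interval: float, seed=None) -> None:
        if not 0 < min_interval <= max_interval:
            raise ValueError("need 0 < min_interval <= max_interval")
        self.min_interval = min_interval
        self.max_interval = max_interval
        self.rng = random.Random(seed)
        self.last_reinforcement = 0.0
        self.current_interval = self._draw()

    def _draw(self) -> float:
        return self.rng.uniform(self.min_interval, self.max_interval)

    def respond(self, t: float) -> bool:
        """Record a response at time t; return True if it is reinforced."""
        if t - self.last_reinforcement >= self.current_interval:
            # Reinforce, restart the clock, and pick the next hidden interval.
            self.last_reinforcement = t
            self.current_interval = self._draw()
            return True
        return False


# Intervals of 2 to 5 minutes (120 to 300 s); the pigeon pecks once per second for an hour.
schedule = VariableIntervalSchedule(120, 300, seed=1)
pellet_times = [t for t in range(3600) if schedule.respond(t)]
gaps = [b - a for a, b in zip([0] + pellet_times, pellet_times)]
print(gaps)
```

Because the pigeon cannot see `current_interval`, steady pecking is the only way to collect each pellet soon after it becomes available.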

Fixed Ratio Schedule

In operant conditioning, a fixed-ratio schedule reinforces behavior after a specified number of correct responses.

This kind of schedule results in a high rate of response, typically followed by a brief pause after each reinforcer is delivered. Because the number of responses required is known, organisms tend to work quickly toward the next reinforcer and then rest.

For Example

An example of a fixed-ratio schedule would be a dressmaker who is paid $500 for every 10 dresses they make. After sending off a shipment of 10 dresses, they are reinforced with $500. They are likely to take a short break immediately after this reinforcement before they begin producing dresses again.
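The dressmaker's pay rule can be sketched as a simple response counter in Python. The class name is hypothetical and the sketch ignores timing entirely, since a ratio schedule depends only on the number of responses. A fixed ratio of 1 reinforces every response, which is the same as continuous reinforcement.

```python
class FixedRatioSchedule:
    """Hypothetical sketch: reinforce every `ratio`-th response (FR 1 is continuous)."""

    def __init__(self, ratio: int) -> None:
        if ratio < 1:
            raise ValueError("ratio must be at least 1")
        self.ratio = ratio
        self.count = 0  # responses made since the last reinforcer

    def respond(self) -> bool:
        """Record one response; return True if it completes the ratio."""
        self.count += 1
        if self.count == self.ratio:
            self.count = 0  # start counting toward the next reinforcer
            return True
        return False


# Dressmaker example: $500 after every 10 dresses, 25 dresses made.
schedule = FixedRatioSchedule(ratio=10)
earnings = 0
for dress in range(1, 26):
    if schedule.respond():
        earnings += 500
        print(f"Paid after dress {dress}")
print(earnings)  # 1000
```

The last five dresses earn nothing yet; they count toward the next $500.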

Variable Ratio Schedule

A variable ratio schedule is a schedule of reinforcement where a behavior is reinforced after a random number of responses.

This kind of schedule results in high, steady rates of response. Organisms are persistent in responding because of the hope that the next response might be one needed to receive reinforcement. This schedule is utilized in lottery games.

For Example

An example of a variable-ratio schedule would be a child being given candy after every 3 to 10 pages of a book they read. For instance, they are given candy after reading 5 pages, then 3 pages, then 7 pages, then 8 pages, etc.

The unpredictable reinforcement motivates them to keep reading, even if they are not immediately reinforced after reading one page.
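The reading example can be sketched in Python by redrawing the response requirement after every reinforcer. The class name is hypothetical, and drawing each requirement uniformly from 3 to 10 pages is an assumption chosen to match the example; other distributions are possible.

```python
import random


class VariableRatioSchedule:
    """Hypothetical sketch: reinforce after an unpredictable number of responses.

    Each requirement is drawn uniformly from min_ratio..max_ratio (inclusive),
    an assumption of this sketch; a new requirement is drawn after each reinforcer.
    """

    def __init__(self, min_ratio: int, max_ratio: int, seed=None) -> None:
        if not 1 <= min_ratio <= max_ratio:
            raise ValueError("need 1 <= min_ratio <= max_ratio")
        self.min_ratio = min_ratio
        self.max_ratio = max_ratio
        self.rng = random.Random(seed)
        self.count = 0  # responses made since the last reinforcer
        self.requirement = self._draw()  # hidden from the learner

    def _draw(self) -> int:
        return self.rng.randint(self.min_ratio, self.max_ratio)

    def respond(self) -> bool:
        """Record one response; return True if it meets the current requirement."""
        self.count += 1
        if self.count >= self.requirement:
            self.count = 0
            self.requirement = self._draw()
            return True
        return False


# Child example: candy after every 3 to 10 pages, over a 100-page book.
schedule = VariableRatioSchedule(3, 10, seed=7)
candy_pages = [page for page in range(1, 101) if schedule.respond()]
pages_per_candy = [b - a for a, b in zip([0] + candy_pages, candy_pages)]
print(pages_per_candy)
```

Since any page might be the one that earns candy, there is no point at which stopping looks safe, which fits the persistence described above.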

Response Rates of Different Reinforcement Schedules

Ratio schedules (those linked to a number of responses) produce higher response rates than interval schedules.

In addition, variable schedules produce more consistent behavior than fixed schedules: unpredictable reinforcement results in more consistent responding than predictable reinforcement (Myers, 2011).

Extinction of Responses Reinforced at Different Schedules

Resistance to extinction refers to how long a behavior continues to be displayed even after it is no longer being reinforced. A response high in resistance to extinction will take a longer time to become completely extinct.

Different schedules of reinforcement produce different levels of resistance to extinction. In general, schedules that reinforce unpredictably are more resistant to extinction.

Therefore, the variable-ratio schedule is more resistant to extinction than the fixed-ratio schedule. The variable-interval schedule is more resistant to extinction than the fixed-interval schedule as long as the average intervals are similar.

In the fixed-ratio schedule, resistance to extinction increases as the ratio increases. In the fixed-interval schedule, resistance to extinction increases as the interval lengthens in time.

Out of the four types of partial reinforcement schedules, the variable-ratio is the schedule most resistant to extinction. This can help to explain addiction to gambling.

Even when gamblers go many responses without receiving a reinforcer, they remain hopeful that they will be reinforced soon.

Implications for Behavioral Psychology

In his article “Schedules of Reinforcement at 50: A Retrospective Appreciation,” Morgan (2010) describes the ways in which schedules of reinforcement are being used to research important areas of behavioral science.

Choice Behavior

Behaviorists have long been interested in how organisms make choices about behavior: how they choose between alternative behaviors and reinforcers. They have been able to study behavioral choice through the use of concurrent schedules.

By operating two separate schedules of reinforcement (often both variable-interval schedules) simultaneously, researchers are able to study how organisms allocate their behavior between the different options.

An important discovery has been the matching law, which states that the proportion of responses an organism allocates to an option closely matches the proportion of reinforcement it has obtained from that option.

For instance, say that Joe’s father gave Joe money almost every time Joe asked for it, but Joe’s mother almost never gave Joe money when he asked for it. Since Joe’s response to asking for money is reinforced more often when he asks his father, he is more likely to ask his father rather than his mother for money.
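The simplest (strict) form of the matching law can be written as B_A / (B_A + B_B) = R_A / (R_A + R_B), where B is the number of responses made to each option and R is the reinforcement obtained from it. The Python sketch below computes the predicted share of responses for Joe's situation. The function name and all numbers are hypothetical, and the example is an informal illustration: the law was established with concurrent variable-interval schedules in the laboratory, and researchers often fit a generalized version with extra bias and sensitivity parameters.

```python
def matching_share(reinforcers_a: float, reinforcers_b: float) -> float:
    """Predicted proportion of responses to option A under strict matching:
    B_A / (B_A + B_B) = R_A / (R_A + R_B).
    """
    total = reinforcers_a + reinforcers_b
    if total <= 0:
        raise ValueError("at least one option must have produced reinforcement")
    return reinforcers_a / total


# Hypothetical month for Joe: asking his father produced money 18 times,
# asking his mother produced money 2 times.
share_father = matching_share(18, 2)
print(share_father)  # 0.9
print(round(50 * share_father))  # 45 of 50 requests predicted to go to his father
```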

Research has found that organisms tend to choose the behavior that provides the largest reward. Several other factors also affect behavioral choice: rate of reinforcement, quality of reinforcement, delay to reinforcement, and response effort.

In general, organisms prefer larger, higher-quality, and more frequent rewards, as well as rewards that come sooner and require less effort to obtain.

Behavioral Pharmacology

Schedules of reinforcement are used to evaluate drug preference and abuse potential. One common method in behavioral pharmacology research is the progressive ratio schedule.

In a progressive ratio schedule, the response requirement increases each time a reinforcer is earned. In pharmacology research, participants must make an increasing number of responses to obtain each injection of a drug (reinforcement).

Under a progressive ratio schedule, a single injection may eventually require thousands of responses. Researchers record the point at which responding stops, which is referred to as the “breakpoint.”

Gathering data about the breakpoints of different drugs allows them to be ranked in a way that mirrors their abuse potential. Using progressive ratio schedules to evaluate drug preference and choice is now commonplace in behavioral pharmacology.
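A progressive ratio session and its breakpoint can be sketched in Python. Everything here is a simplifying assumption for illustration: the requirement grows arithmetically by `step` (published studies use various progressions, often steeper ones), and the hypothetical `max_effort` parameter stands in for how many responses the subject is willing to make for one injection. In real experiments, a session usually ends after a set period without responding. The breakpoint is taken to be the last ratio completed, a common operational definition.

```python
def progressive_ratio_session(max_effort: int, start: int = 1, step: int = 2):
    """Hypothetical sketch of a progressive ratio session.

    The requirement starts at `start` and grows by `step` after each reinforcer.
    The subject is assumed to complete any ratio up to `max_effort` responses
    and to stop at the first larger one.
    Returns (breakpoint, reinforcers_earned, total_responses).
    """
    if start < 1 or step < 1:
        raise ValueError("start and step must be at least 1")
    requirement = start
    breakpoint_ratio = 0  # last ratio completed; 0 if none
    reinforcers = 0
    total_responses = 0
    while requirement <= max_effort:
        total_responses += requirement  # subject completes this ratio
        reinforcers += 1  # and earns one injection
        breakpoint_ratio = requirement
        requirement += step  # the next injection costs more
    return breakpoint_ratio, reinforcers, total_responses


# Requirements 1, 3, 5, 7, 9 are completed; 11 exceeds the subject's limit.
print(progressive_ratio_session(max_effort=10))  # (9, 5, 25)
```

Comparing breakpoints across drugs then amounts to comparing how far up this ladder subjects are willing to climb for each one.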

Behavioral Economics

Operant experiments offer an ideal way to study microeconomic behavior; participants can be viewed as consumers and reinforcers as commodities.

Through experimenting with different schedules of reinforcement, researchers can alter the availability or price of a commodity and track how response allocation changes as a result.

For example, changing the ratio requirement (increasing or decreasing the number of responses needed to receive the reinforcer) is a way to study price elasticity of demand.

Reinforcement schedules are also used to study substitutability, by making different commodities available at the same price (the same schedule of reinforcement). By using the operant laboratory to study behavior, researchers can manipulate independent variables and measure dependent variables directly.
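Treating the ratio requirement as a price, elasticity can be estimated from consumption at two prices. The Python sketch below uses the arc (midpoint) formula, one standard way to compute elasticity between two points; behavioral economists often fit a whole demand curve instead. The function name and the data (100 reinforcers per session at FR 5, 60 at FR 10) are hypothetical.

```python
def arc_elasticity(price1: float, quantity1: float, price2: float, quantity2: float) -> float:
    """Arc (midpoint) price elasticity of demand between two price points.

    Here price = responses required per reinforcer and
    quantity = reinforcers earned per session.
    """
    if price1 == price2:
        raise ValueError("prices must differ")
    pct_change_q = (quantity2 - quantity1) / ((quantity1 + quantity2) / 2)
    pct_change_p = (price2 - price1) / ((price1 + price2) / 2)
    return pct_change_q / pct_change_p


# Hypothetical data: FR 5 yields 100 reinforcers per session, FR 10 yields 60.
e = arc_elasticity(price1=5, quantity1=100, price2=10, quantity2=60)
print(round(e, 2))  # -0.75
print("elastic" if abs(e) > 1 else "inelastic")  # inelastic
```

An elasticity between 0 and -1 means consumption fell proportionally less than the price rose, so the subject kept defending its intake of this reinforcer.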


References

Ferster, C. B., & Skinner, B. F. (1957). Schedules of reinforcement. New York: Appleton-Century-Crofts.

Morgan, D. L. (2010). Schedules of reinforcement at 50: A retrospective appreciation. The Psychological Record, 60(1), 151–172.

Myers, D. G. (2011). Psychology (10th ed.). Worth Publishers.

What Influences My Behavior? The Matching Law Explanation That Will Change How You Understand Your Actions. (2017, August 27). Behaviour Babble. https://www.behaviourbabble.com/what-influences-my-behavior/

What are schedules of reinforcement?

Schedules of reinforcement are rules that control the timing and frequency of reinforcement delivery in operant conditioning. They include continuous reinforcement, in which every response is reinforced, and four partial schedules: fixed-ratio, variable-ratio, fixed-interval, and variable-interval, each dictating a different pattern of rewards in response to a behavior.

Which schedule of reinforcement is most resistant to the extinction of learned responses?

The variable-ratio schedule of reinforcement is the most resistant to extinction. This is because the reinforcement is given after an unpredictable number of responses, making it more difficult for the behavior to cease. Examples include gambling or lottery games, where a win is unpredictable but can occur anytime.